What you can tell without even reading the Mueller Report: 13 ideas for investigating documents.

Governments and businesses the world over need to get ahead of this game and realise that all of their communications (statements, videos, advertisements) can be analysed easily and instantly, before a document even needs to be read.

There were armies of lawyers, journalists and armchair enthusiasts waiting to dismantle this report (the formal name of which is "Report on the Investigation into Russian Interference in the 2016 Presidential Election").

<<< I am aware that a new version of the PDF was published 22nd April, see note in footer>>>

Analysis

The obvious lack of care with such a significant document reveals a lot about the state of governmental use of technology. Millions of people were unable to access it, servers were put under huge strain, and scammers had a perfect opportunity to infect people with malware.

It is also quite clear that many, if not all, of the pages were printed out and scanned back in. Perhaps because of the fear of another document with botched redactions?

Forgetting the quality or accessibility of the PDF or the lack of effective scaling for an audience of many, many millions (although that's annoying), these are some of the techniques available to analyse the content and structure of a document before issuing a significant report to wider scrutiny.

1. Good old fashioned crowd-sourcing

People, assigned to review and flag content, can still be a useful and quick resource to decide on and judge the content of a document. Run each paragraph through a four-eyes review, flagging it for sentiment, accuracy, quality etc, using your own defined rules.

Many journalists still rely on a mass of people to break apart a document and read each paragraph or page. That way they can get to the story quicker...! Couple this with some decent planning, document review tools and textual analytics, and a 400-page document can be digested and summarised in minutes.

2. Remove metadata.

The report was created at 6:23pm on the 17th, on a RICOH MPC6502. That information is available with just a 'right-click' on the PDF to view its properties. From this simple information alone we can tell that the document was still being worked on in the last hours. Years of effort and there is still work to be done the day before it’s released...

Off the back of that information, a quick Google search finds that Ricoh supply the DoJ, and that it's likely the DoJ also use Remoix, the Ricoh Cloud Service. Printers are notoriously a target for hackers given their 'IoT' level of security. Information left in a document like this can therefore lead to later unintended issues.

Isn't it also surprising that this information is public? That happens to be the case for most organisations' IT systems... PRIME hacker material.
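To show how little effort this takes, here is a minimal Python sketch that pulls the metadata entries out of a PDF's raw bytes. It is an illustration under assumptions, not the method used for this article: the regular expression only catches plain literal strings, the function name `read_info_entries` is made up, and the sample bytes are a hypothetical fragment rather than the real report. Metadata stored in compressed streams or XMP packets would need a proper PDF library.

```python
import re

# Naive pattern for literal-string entries such as "/Producer (Some Device)".
# Assumption: the values are plain literal strings with no nested parentheses.
INFO_ENTRY = re.compile(
    rb"/(Producer|Creator|Author|Title|CreationDate|ModDate)\s*\(([^)]*)\)"
)


def read_info_entries(pdf_bytes: bytes) -> dict[str, str]:
    """Return the first literal-string value found for each metadata key."""
    found: dict[str, str] = {}
    for key, value in INFO_ENTRY.findall(pdf_bytes):
        # Keep only the first occurrence of each key.
        found.setdefault(key.decode("ascii"), value.decode("latin-1"))
    return found


# Hypothetical fragment of a PDF Info dictionary, for illustration only.
sample = b"<< /Producer (Example Scanner 1234) /CreationDate (D:20190417182300) >>"
info = read_info_entries(sample)
print(info)
```

For a real file you would pass in `open(path, "rb").read()`. The check cuts both ways: run it on your own document before release and make sure it comes back empty.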

3. Check formats and compression of pages

I can see that page number 0142 (Page 135 of Vol I) uses fax compression (like a TIFF image) and is black and white. The rest of the report is in JPG.

There might be quite a number of reasons for this, but the most likely one is that the page was re-scanned with different settings, perhaps after a ‘double feed’ on the scanner, with two pages going through in one.

The raw images are all landscape and were rotated to create the final PDF.

It seems to be the only "different" image; you will note the absence of the poor-quality yellow streak that's present on all of the other pages. Could it have been inserted after the main scanning exercise of the printed document?

The presence of this yellow line (look closely, it is there..!) might simply mean that the Ricoh device used in the last hours needed a service beforehand, to clean the scanner glass and pick rollers or change the toner.

It's also a common effect of printing mostly in black and white with just a little bit of colour. The horizontal nature means that the document was fed through in landscape, which is confirmed by the orientation of the raw images in the PDF. They might want to check out this link.

Different formats and quality levels in a PDF or other image-based document can lead to attention being drawn by inquisitive minds and (potentially) conclusions being reached.

4. File sizes

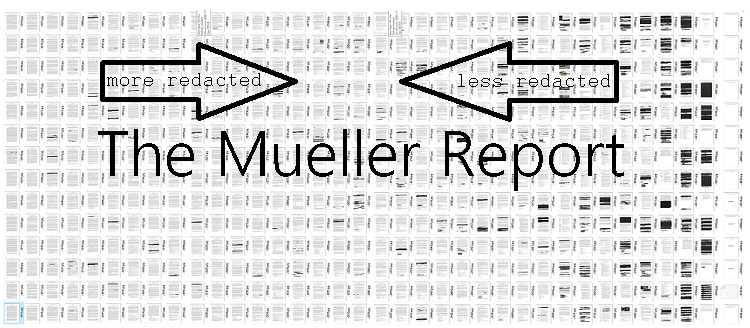

I keep banging on about this to anyone who listens in my team or the wider eDiscovery world. File sizes (and the frequency of types of file) are your friend. Take a look at this thumbnail with the raw images from the document, sorted by size.

I have already identified all the redacted pages just using Windows, sorting images by file size. I used the Xpdf command-line tools to extract the raw images in about 20 seconds (pdfimages does the extraction; pdfinfo reports the metadata).

There are 13 pages without much on them at all, shown at the bottom of the image...

Page 161 of Volume II is the most information dense page in terms of text, at the top of the image above. The most heavily redacted page is 37 (Page 30 Volume I).

Imagine receiving a PDF file and immediately getting this information without having to first read the document. Without any ‘AI’ in sight and in an instant.

Perhaps there might be a case for your document production to visually reduce the differences and even the file-size of each page somewhat, to reduce the likelihood of information being given away.

I might decide to prioritise my review of the hundreds of pages by looking at the important redacted parts first of all.. instant headlines...
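The same sort can be done outside of Windows Explorer. The sketch below is a minimal Python version, assuming the page images have already been extracted into a folder; the function names and the demo file names and sizes are made up for illustration.

```python
from pathlib import Path


def sizes_in_folder(folder: str, pattern: str = "*.jpg") -> dict[str, int]:
    """Map each extracted page image in a folder to its size in bytes."""
    return {p.name: p.stat().st_size for p in Path(folder).glob(pattern)}


def rank_by_size(sizes: dict[str, int]) -> list[tuple[str, int]]:
    """Largest file first, so the outliers sit at either end of the list."""
    return sorted(sizes.items(), key=lambda item: item[1], reverse=True)


# Made-up sizes for illustration; real input would come from sizes_in_folder().
demo = {
    "page-001.jpg": 120_000,
    "page-002.jpg": 310_000,
    "page-003.jpg": 45_000,
    "page-004.jpg": 295_000,
}
ranked = rank_by_size(demo)
for name, size in ranked:
    print(f"{size:>8}  {name}")
```

The pages at the two extremes of the list are the ones worth opening first.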

5. Skew

Scanning and re-scanning rely on mechanical paper feeds, which can leave pages skewed.

In this example page, I can see that it's been ‘skewed’. Visually this shows a slight rotation anti-clockwise.

So what can looking in detail at all of the images tell me?

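Skewness, kurtosis and entropy are statistical measures. Skewness and kurtosis describe how a distribution departs from a normal distribution (bell curve), in its asymmetry and in the weight of its tails. Entropy measures how much information, or variation, the values carry.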

You can ‘Wikipedia’ them, but it doesn't matter too much what they mean... it's what they can show you that's important. They can show you some of the differences and commonalities across the entire 448 pages.

It’s important to note that these statistics are about balance of information and don’t necessarily show the rotation of a page, but we can use them to see variation.

I surmise, without looking at the actual document, that it's likely it has been printed then re-scanned, as all of the pages are irregular.

Performing that analysis across all of the pages might give you a clue as to which were handled together or not, since a mechanical device usually has a pattern that it adheres to.
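For the curious, these per-page statistics are not hard to compute. The sketch below is a plain-Python illustration using textbook definitions (moment-based skewness, excess kurtosis, Shannon entropy) on a list of grey values from 0 to 255. It is an assumption that these match what any particular tool reports, and the two demo "pages" are synthetic.

```python
import math
from collections import Counter


def image_stats(pixels: list[int]) -> dict[str, float]:
    """Skewness, excess kurtosis and Shannon entropy of grey values."""
    if not pixels:
        raise ValueError("need at least one pixel value")
    n = len(pixels)
    mean = sum(pixels) / n
    # Central moments of the pixel distribution.
    m2 = sum((p - mean) ** 2 for p in pixels) / n
    m3 = sum((p - mean) ** 3 for p in pixels) / n
    m4 = sum((p - mean) ** 4 for p in pixels) / n
    if m2 == 0:
        # A perfectly flat page has no shape to measure.
        skewness = kurtosis = 0.0
    else:
        skewness = m3 / m2 ** 1.5
        kurtosis = m4 / m2 ** 2 - 3.0  # 0 for a normal distribution
    counts = Counter(pixels)
    entropy = sum((c / n) * math.log2(n / c) for c in counts.values())
    return {"skewness": skewness, "kurtosis": kurtosis, "entropy": entropy}


# Synthetic pages: half black and half white, versus mostly white.
balanced = image_stats([0, 255] * 50)
mostly_white = image_stats([255] * 95 + [0] * 5)
print(balanced)
print(mostly_white)
```

Running something like this over every page and plotting the results side by side is what makes the odd pages stand out.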

Of course, it could be that the page was just a little crooked, but it starts to give clues about the fragmented nature of document creation. I used ImageMagick for this (other tools are available), minutes after downloading the document. Here is an overview of the whole document:

Page 1 through to page 448, from left to right.

There are some obvious correlations. At a high level, there might be 'something going on' with pages 1, 7, 22-30, 37, 46, 60-66, 98, 180, 185, 197, 204, 207, 211, 341, 344, 392-399, 415 and 439-441 of the PDF.

Perhaps they were redacted differently, perhaps they were inserted into the PDF afterwards, late in the day. The patterns could instantly draw an inquisitive mind.

We already know the document was still being finalised the day before the release. Some of these pages contain redaction, perhaps last minute redaction?

I didn't fill out the rest. But hopefully you can see that, by discerning the statistical differences, we could potentially identify additional information about each page.

Once you move into the realm of the image, you can also pick up minor blemishes in colour, borders and pixels and discover any ‘odd page out’ moments after the release of the document.

Could these pages have been treated differently somehow? Printed separately, before the whole document was scanned back in on the Ricoh? It's an easy leap to make that perhaps the document redaction exercises were performed separately. Also judging by the pixelation of the document and the accentuated skew, this document was most likely scanned twice.

Depending on the context of your particular document, you can discover many things from this type of statistical analysis. Analysing the Red, Green and Blue channels separately would give much more granular and useful information, particularly since some of the redactions are colour coded. The two images with the highest RGB values, pages 038 and 0185, are shown here.

Take a look at some other tools used for analysing PDF files in Forensics. PDF and other types of document files give away a lot of information... so it's best you are aware.

6. Extract text or OCR post redaction.

Check for redacted terms. We can't be sure if they did this, but some of the redacted portions are a little obvious, because terms left un-redacted elsewhere in the document let you make inferences. Think about analysing the sizes of the boxes and finding any commonalities between them. Boxes of the same size could be hiding the same word. Then think about words that might fit into those boxes. Which words might you try? The rest of the words in the document...?

It might be a lot of work, but in the example of the black hole picture, an image was made from 'common sense' pieces of a jigsaw. Which piece of the text is most likely to be correct and fit into the redaction space? It could be done, but that's beyond the scope of this article.

OCR should have been included after this document was created, and the document should have been better compressed. When adding OCR post redaction, you should always search the OCR text for any instances of redacted terms that still remain, as a final safety check.
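That final safety check is simple to automate. Here is a minimal Python sketch, assuming you hold the OCR text as a string and have your own list of terms that were meant to be redacted. The function name, the sample text and the terms are all invented for illustration.

```python
import re


def find_leftover_terms(ocr_text: str, redacted_terms: list[str]) -> dict[str, list[int]]:
    """Return character offsets where a supposedly redacted term still appears."""
    hits: dict[str, list[int]] = {}
    for term in redacted_terms:
        words = [re.escape(w) for w in term.split()]
        if not words:
            continue  # skip empty terms
        # Allow any whitespace between words, since OCR often breaks lines.
        pattern = re.compile(r"\b" + r"\s+".join(words) + r"\b", re.IGNORECASE)
        positions = [m.start() for m in pattern.finditer(ocr_text)]
        if positions:
            hits[term] = positions
    return hits


# Invented text and terms, for illustration only.
text = "The witness, Jane Example, met the\nsubject. JANE  EXAMPLE declined."
hits = find_leftover_terms(text, ["Jane Example", "Project Nightjar"])
print(hits)
```

An empty result is what you want to see before publishing. OCR errors mean an exact match can miss mangled spellings, so treat this as a floor rather than a guarantee.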

7. Base linguistics.

Obviously relevant for non-English documents, but translations will be created, and perhaps it's worth being in control of what these might look like. Could translations into multiple languages, so that someone else doesn't need to translate, be a consideration before publishing such a document? Most Natural Language Processing (NLP) utilises sentence structure to analyse sentiment and topics, and also to translate text into other languages.

These structures make more sense than simply a one-to-one translation of words. With these dependencies in language, we get meaning, sentiment, intent, objects etc. More on that later. NLP helps us to understand the structure and meaning of a sentence, which can then be made to represent the same structure and meaning, but in a different language.

8. Simple word and term frequency counts.

These can reveal the intent of the document. Mentioning "witch hunt" many times might tell you something about the intention of the authors. Decent Natural Language Processing can tell you what constitutes a phrase rather than just each single word; in this document it's "President Trump". However, the absence of words is just as telling.

Most people do this almost instantly as a matter of course, and often it's the most telling of the simple analyses. In particular, looking at longer and less frequent words can reveal some interesting quick facts about the document.
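A minimal Python sketch of the idea, using only the standard library. The tokeniser is deliberately crude (lower-case runs of letters and apostrophes), two-word counts stand in for proper phrase detection, and the sample sentence is invented.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Crude tokeniser: lower-case runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def term_counts(text: str, min_length: int = 1) -> Counter:
    """Count single words, optionally keeping only the longer ones."""
    return Counter(w for w in tokenize(text) if len(w) >= min_length)


def bigram_counts(text: str) -> Counter:
    """Count adjacent word pairs as a rough stand-in for phrases."""
    words = tokenize(text)
    return Counter(zip(words, words[1:]))


# Invented sample text, for illustration only.
sample = ("The President said the President would respond. "
          "The Office noted the response.")
print(term_counts(sample).most_common(3))
print(term_counts(sample, min_length=8).most_common(3))
print(bigram_counts(sample).most_common(1))
```

Checking for absence is just as easy: `term_counts(sample)["exonerate"]` returns 0 for a word that never appears.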

Moving on from that, categorising verbs, nouns etc, can also lead to further interesting information.

9. Entities

When we move into the area of Natural Language Processing and textual analytics, we can use technology to extract Entities (names of people, places, companies, numbers, locations, dates, times etc.) The example below shows results from the introduction and executive summary. Again, performed in seconds, without having to read the document.

There is a version of this that was created by LogikCull (other tools are available). They also created an OCRd version of the document, freely available here. Maybe someone else has a free one lying around? The principle is that by using sentence structure and context, the object of a sentence can be identified as a 'named entity'.

The entities can be checked against existing taxonomies and grouped accordingly. In the rightmost picture, you can see the category of 'Author'. This can be a simple lookup against data sets like Wikipedia or even your own corporate taxonomy.

10. Relationships

Some NLP software can link entities quite easily. So, for a given document, it can tell you that it's likely (for instance) that someone is the "owner" of something else. Believe me, decent journalists applied NLP to extract this type of information within minutes of the release of the document. Even familial relationships can be extracted in this way. Donald Trump Jr, son of Donald Trump etc...

It's good to play with the demos of some of the providers of these services. For instance from the Introduction, Rosette (other tools are available) has picked up the following "citizens of Russia", again within seconds of the text being available:

11. Sentiment

Textual analytics and NLP can now estimate the intended sentiment of a sentence or paragraph with variable accuracy. With legalese it's a little more difficult, as it's generally all negative or formal in tone anyway. But again, these tools are easy to come by and can tell you a lot about the tone the document was written in. Take a look at this sample from Rosette (other tools are available): the introduction to the Mueller report is 62% positive (in a technical sense).

12. Topics

For many, many years, I have been using software that can analyse topics or 'concepts' in documents. The usual approach is to group phrases together using statistical analysis and extract out the nouns or noun phrases that are most 'prevalent' within a body of text. Another one that can be done in seconds. To the left we see the top 25 picked up from the Introduction and Executive summary of Volume I.

This was done in seconds of extracting the text from the document, by utilising the abilities of TextRazor. Many other tools are available to do this.

Once textual analytics or NLP has decided on a 'concept', it will usually then place that concept into a higher-level taxonomy. Taxonomies are standards that are recognised for specific topic areas. For an example, see the first ten categories detected from the “Executive Summary and Introduction for Volume I” of the Mueller report from TextRazor. This process took 5 seconds based on just the text from that chapter:

13. Semantic similarity.

Think of those 'tweet' comparison sites, the ones that tell you how likely it is that someone wrote a particular tweet. It is feasible to detect who wrote a paragraph, or at least to group similar writing styles together, to determine the number of separate authors and how similar each paragraph is to each author. With legalese and political-ese, the tone is generally negative and formal. But with the right taxonomy and machine learning from other documents, a model could be built to automatically read AND understand a document in an instant.
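As a very rough illustration of comparing paragraphs, the Python sketch below scores two pieces of text by the cosine similarity of their word counts. This is a crude stand-in, assumed here for simplicity: real authorship or semantic-similarity tools use far richer features, and the three sample sentences are invented.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Word counts for a paragraph, lower-cased."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """1.0 means identical word proportions, 0.0 means no words in common."""
    dot = sum(a[w] * b[w] for w in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Invented sentences, for illustration only.
para_a = bag_of_words("The Office did not reach a conclusion.")
para_b = bag_of_words("The Office did not reach a determination.")
para_c = bag_of_words("Totally unrelated words here")

sim_ab = cosine_similarity(para_a, para_b)
sim_ac = cosine_similarity(para_a, para_c)
print(round(sim_ab, 3), sim_ac)
```

Scoring every paragraph against every other and clustering the results is one way to start guessing at how many hands were involved.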

Taking this to its natural course, with the convergence and acceleration of technology, it would be feasible to create a tool to ask natural language questions of a document, much like a Google search.

Conclusion

I am sure there are many more techniques to identify outliers, interpret meaning and analyse a document in minutes. This is by no means an exhaustive list...

After the 30 minute download, the entire exercise including OCR, NLP, image analysis, took under an hour end-to-end, working on my own. I had all this information and understanding at my fingertips without once looking at the actual document (OK, I lie, the first thing I did was check for the redactions being permanent!)

The main message though - if you are producing a sensitive document or communication, remember that there are people waiting to dismantle and analyse it in ever smarter and quicker ways.

By breaking a document apart into images, pages, paragraphs, sentences, entities, sentiments etc, I can instantly identify anomalies and prioritise where I need to focus, without wasting any time attempting to read or understand the content.

This is best done before the intended (or unintended) audience gets it, with a view to what that audience might surmise from the presence or absence of information.

HTH,

Martin.

Note: a new version of the same document was quietly released on April 22nd, containing searchable text and corrected bookmarks. The images are of a lower quality still, and slightly corrected in places. The OCR text is poor quality and even simple quality control has not been performed, but it is one step up from the original. By using the same analysis I am able to detect corrections in the alignment of images. Here I have overlaid page 405 of the old document, which shows the most significant difference, with the same page of the new document.

Disclaimer: This is all my own opinion and experience and isn't necessarily reflective of the views of my current or previous employers. It is not an endorsement or advertisement of any product and I am not affiliated with any product mentioned. It may have been written by a bot. You tell me? Will I actually read the document? Probably not.

About Martin: Over the past 17 years I've worked with Chief Legal Officers, General Counsel, Compliance Professionals and ‘Big Law’ firms globally, to create and implement systems and processes that reduce the likelihood of failure during a crisis. I can't tell jokes or write them. I am also apolitical.
