US politicians from both sides of the political aisle have proposed a ‘Future of AI Act’ that will form a special committee to steer the nation’s approach to AI across multiple areas, including key legal aspects.

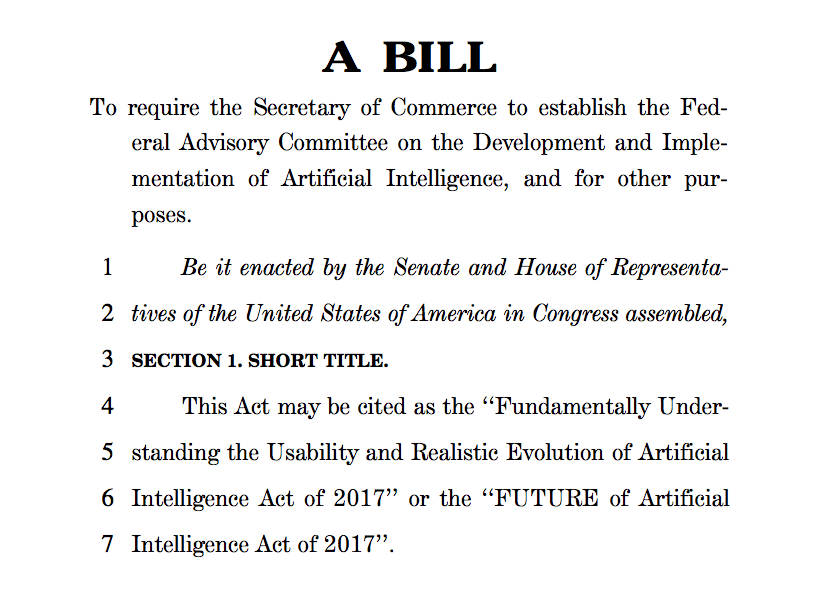

The proposed Act, or, in full, the ‘Fundamentally Understanding the Usability and Realistic Evolution of Artificial Intelligence Act of 2017’, hence the ‘FUTURE of AI Act’, will create a Federal committee of experts from within and outside of Government who will advise the Secretary of Commerce. Future laws regulating AI may then be steered by that input.

The areas of interest cover subjects such as economic impact and the competitiveness of the US economy in the future, but also will explore some thorny legal matters, which will include: ‘ethics training’ for technologists working with AI; data sharing; and ‘machine learning bias…and cultural and societal norms’.

And perhaps most importantly for lawyers, it will look at and then advise the US Government on:

‘Accountability and legal rights, including matters relating to the responsibility for any violations of laws by an artificial intelligence system and the compatibility of international regulations.’

It will also look at the need for retraining, to cope with this new wave of industrialisation of cognitive tasks across the workforce.

The bill has been supported in particular by Representatives John Delaney (Democrat, Maryland) and Pete Olson (Republican, Texas), who together formed the first ‘AI Caucus’ earlier this May.

At the time, Delaney said: ‘Artificial Intelligence promises to be one of the paradigm-shifting developments of the next century, with the potential to reshape our economy and daily life just as fully as the internal combustion engine or the semiconductor.’

‘As lawmakers, our choice is to either get caught flat-footed or to proactively anticipate how things will change and work on smart policies to make sure that the country benefits as much as possible overall. We have to start becoming future-focused when it comes to policy,’ he added.

Understandably, some in the wider AI community, as well as those in the legal AI world, may be slightly nervous about the potential for the US Government to step in and start regulating. However, there do appear to be some opportunities to get involved, either directly or indirectly.

The committee, once formed, will include, aside from the politicians:

- Five members from the academic or research community.

- Six members from private industry, at least one of whom shall be from a small business concern.

- Six from civil society, at least two of whom shall be from groups that advocate for civil liberties or civil rights.

- Two from labour organisations or groups.

Given this make-up, there may be opportunities for legal tech experts, legal AI company founders and legal academics to get involved, or at least to communicate with the committee via these future members. That said, it would seem a good idea for the committee to include at least one member from the legal AI world, given its importance to the future development of the US economic and regulatory landscape.

There will also be opportunities to connect with the non-voting members from other Government branches and with sub-committees that may form in the future. For example, someone in the US may wish to propose that a special sub-committee on legal AI is formed, given that the legal sector represents over $450bn to the US economy.

This latest move follows similar projects by the EU as well as the UK Government and the Houses of Parliament, e.g. the APPG-AI group, to prepare for the new wave of cognitive technology that will surely impact many process-type jobs, as well as creating new opportunities and value in the economy.

Representative Delaney can be contacted here and Representative Olson can be contacted here (local residency limitations may apply for email contact). If anyone plans to get involved, please say.
